The prologue

At the Let’s Test conference last week, I attended a half-day tutorial on bug diagnosis by James Lyndsay, in which we tried to analyze the actions of testers when pinpointing bugs. We did all this by identifying our actions during some bug diagnosing exercises. My learnings kept lingering in the back of my mind throughout the conference (which was excellent, by the way). When I noticed on the way back home that something was wrong with the songs on my iPhone play-list, I decided to test my newly learned diagnosing-fu by describing my learnings in an exploratory essay while trying to find out what the problem really is.

At the time of writing, I don’t know what the cause of that problem is, yet. I will document my knowledge as it evolves (hopefully). So cover me, I’m going in…

The problem

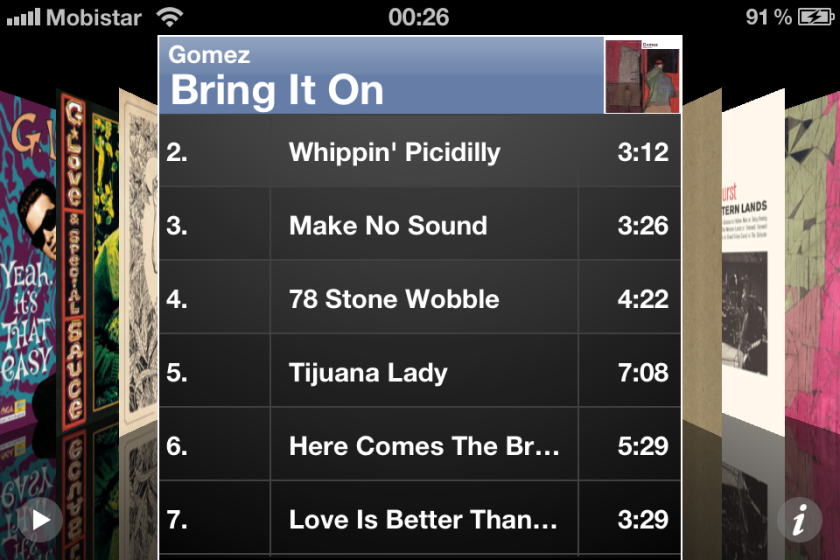

On the way from Stockholm to Brussels, at a cruising altitude of 10.000 km, the hostess tells us it is safe to turn all electronic devices back on. I whip out my phone and start flipping through the albums that were uploaded via a newly created play-list. I select an album and hit play. But something’s amiss – that familiar album sounds less familiar this time around. It takes a while before I realize that the album didn’t start with its opening song. Did I hit shuffle unknowingly, by any chance? That has happened before… Nope, shuffle is off. I go to the details of the album and notice that the first song is not there.

First probing

Strange, that. I initially dismiss it as a one-off, but the next album I listen to, the same phenomenon occurs. All songs are there but the opening one. I check the other albums and it turns out that half of the albums uploaded to my play-list lack their first song. If a bug is something that bugs a user, this must be a capital B one. Having to listen to incomplete albums seriously bugs me; what’s even more: it takes away my desire to listen.

[I notice how I react quite emotionally to the strange behavior. Emotions are a powerful oracle. Although I have only limited knowledge of the problem now, I declare it officially a bug]

Defocusing & narrowing down

I feel frustrated because further bug investigation possibilities on my phone seem limited. I put it away and decide to defocus. A little in-flight snack and some reading manage to temporarily distract me, but an hour later the bug creeps up on me again. I follow my energy that leads me to start a static analysis. Although the symptoms are all on display here, I suspect the cause is not located in my phone but rather within my PC, iTunes or in the synching between iTunes and the phone.

[I just narrowed down the scope of the investigation with a first broad hypothesis]

From possible to plausible

I haven’t got iTunes at my disposal right now, but while I am at it, I refine my previous hypothesis into a couple of more specific ones that I will be able to confirm or refute when I get home:

- The original source mp3-folders contain incomplete albums

- The albums were uploaded wrongly in my iTunes library

- The albums were copied wrongly into the play-list

- The albums were synchronized wrongly from the play-list to the phone

[This list of 4 contains possible causes]

I can narrow these down further because hypotheses 1 and 2 are highly unlikely. The very same songs, from the very same source folders were recently used in other play-lists without a problem, and I haven’t noticed songs missing from albums in my music library.

[This makes hypotheses 3 and 4 the most plausible ones – better concentrate on these]

Checking hypotheses

Back home, I am reunited with the family, and with my iTunes library that resides on an external storage drive (which also happens to contain the original mp3-files). I quickly check option 1 and 2, because I am painfully aware of biases in my memory, and my thinking. [ Although I think these two options are less likely, you never know. I’m a tester, and we know things can be different, right? Well, in this case, not so much]. The suspicious albums in the source folders are complete, as they are in the music library.

[I notice that, rather than checking all albums, I tend to focus on one sample album to check my assumptions. Comparing the same sample throughout the hypothesis increases consistency and diminishes possible distortions. A possible risk is of course that this could turn out to be a not-so representative example]

This leaves me with 3 and 4, the plausible ones.

Were the albums copied wrongly in the suspected play-list? I know they have been correctly copied to other play-lists before, so I am curious to see if this can really be the case.

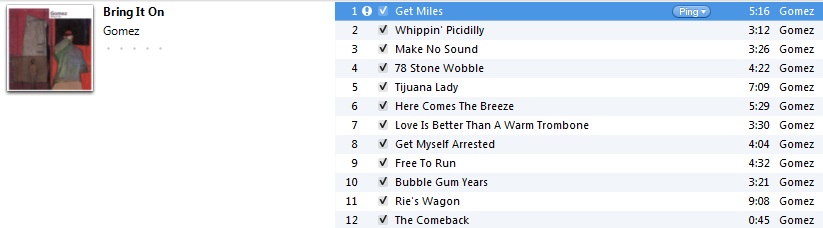

A-ha! Now we’re getting somewhere. Song number one, “Get Miles” got lost in the mists of the copy from library to play-list.

[I now come to realize that this was to be expected, since the synch process was designed to synch exactly what is in this play-list. Oh well, better be safe than sorry. This causes me to drop hypothesis 4, because the synch did exactly what is was supposed to do]

Reflecting & diving deeper

So, time-out for a second. What is happening? The contents of some albums were corrupted somewhere during the transfer to the play-list. First thing that strikes me: why only half of the albums? Why not all of them? They were all dragged to the play-list in the same session. Is there something I did differently for some albums? I recall that I started with importing individual albums into the library, but that I then resorted to a bulk import of the remaining albums. Maybe the “bulk-imported” albums are causing this? Then again, they are correctly loaded in the library, it is when they were transferred to the play-list that things went awry.

[While diving deeper within hypothesis 3, I develop a sub-hypothesis]

3. The albums were copied wrongly into the play-list

3.1. The problem with the play-list has something to do with bulk imports

I check hypothesis 3.1: I do a bulk import from several albums in one folder, and then transfer those to a newly created play-list. To no avail. I drag the suspicious album to a new play-list, but all 12 songs are there. I drag a couple of similar ones in there separately. Nothing wrong with them.

[This is not really working for me, and it starts to get boring. Let’s drag all of my available albums in, at the same time]

Bingo! Many albums there with the first songs missing. That was easy. Triumphantly, I clean the play-list and repeat the same action, to confirm.

[Repeating experiments can decrease uncertainty, but can also free us from the illusion of control]

Nothing. All back to normal again. Huh? What did I do exactly, that first time? I launch several attempts to reproduce what I had first seen, including starting from a new play-list from scratch, all of them unsuccessful. It takes a while before I realize that I just copied the contents from the faulty play-list into the new one.

[So I start making mistakes. Back to square one. I abort hypothesis 3.1. and decide to catch some sleep]

New perspectives

Another day, a fresh perspective. What else is striking about this bug? It occurs to me that the solution might lie in the fact that every single one of those missing songs is the first song on the album. What does the missing “number one” tell me?

- Order of play?

- Something that started wrong and then went well?

- Switching between albums?

- Corrupting the first song and switching albums?

- Switching from a bad to a good state?

[I am now focusing on the “why” of the first songs, whereas in the previous hypothesis I was focusing on the “why” of only half the albums]

Was there something I did that made the first songs go in a special state? Suddenly, I remember… I keep forgetting that I moved the iTunes library to an external drive, it used to be on my laptop until a month ago. That means that iTunes does not recognize the songs in my library as long as the external drive is not connected to my laptop. That is no problem, as long as I don’t perform any actions on the songs, like dragging them or playing them. Otherwise the songs get a lovely exclamation mark in front of them. I disconnect the external drive and try to play the first song of the album in the library. The trusted exclamation mark appears:

I find myself investigating a new sub-hypothesis of hypothesis no. 3:

3. The albums were copied wrongly into the play-list

3.1. The problem with the play-list has something to do with bulk imports

3.2. The problem has something to do with a disconnected library

Usually, the moment I notice I forgot to hook up the external drive, I quickly connect it and no harm is done. I wonder what would happen if I now connect the external drive again and drag the album to a new playlist in this state? [I have the feeling I’m nearly there. Could it really be…?]

I feel I am finally making some progress and refine 3.2 into 3.2.1.:

3. The albums were copied wrongly into the play-list

3.1. The problem with the play-list has something to do with bulk imports

3.2. The problem has something to do with a disconnected library

3.2.1. Actions performed on songs while being disconnected from the library cause the songs to be skipped when copying albums to play-lists, even when the library is connected again at the time of copying

I do the experiment, and it confirms my hypothesis. I repeat the same procedure with an other album and this time, the same behavior occurs.

[I was kind of hoping and expecting that this would happen, which is normal behavior, but which can also be a danger during testing. We tend to focus more on things we really *want* to see]

The Cause & the Trigger

This last discovery leaves me with some mixed emotions. I feel happy to know what caused the missing songs, but I am also puzzled as to why so many songs have received the exclamation mark without me noticing. I can perfectly reproduce the problem, and I’m pretty sure it won’t happen to me again, since I will now be aware of the little exclamation marks while making play-lists. I have found the cause of the strange behavior that kept me busy for quite a while, but still… I am not sure how it got triggered in the first place.

I do have a trigger hypothesis (for now): flipping through albums using the cover flow view and hitting enter or trying to play them while the library is not there only marks the first songs with an exclamation mark. I recall that tried to listen to some albums, but not the complete amount that had missing songs. So there is still a decent amount of mystery involved.

Epilogue – is it a bug, really?

When I was first confronted with the problem, I proclaimed this a bug with capital B, because it annoyed me – the user – and it made me stop using the product. Has my opinion changed now that I have lived with the thing for a couple of days? I would argue that it has.

The behavior only seems to occur in very specific situations, and although the impact was quite big for me, it is unlikely that it will happen to me again. Is there a possibility that others will stumble upon this? Well, I stumbled upon it, so chances are that others will do too. And I certainly think there are other people like me that have their libraries on other media that are not by default connected to their computers. So yes, I think it IS a bug, although not as severe as I initially thought it was. This goes to show that we adapt severity and priority to our gradually evolving knowledge about the bug, and to the changing context (Something Rob Sabourin neatly pointed out as well in his brilliant Let’s Test keynote).

What I got to know about the problem so far leads me to believe that the product (iTunes) can be improved in a couple of ways (actually, there are plenty of other ways of improving it, but I digress). How about the following ones, for starters:

- Doing a re-check of previously failed songs in case connectivity has been restored?

- Removing obsolete exclamation marks when an external library is re-connected?

- Adding a notification when trying to copy “songs not found” to playlists?

- Making it more conspicuous to the user when the music library is not connected?

This concludes my adventure that started on the way back from Let’s Test. I wrote this post in several stages as I was trying to get a grip on that devious bug. It didn’t turn out to be the “clean” or “clear” bug I hoped it to be. Perhaps the iTunes product managers will even say it’s cosmetic or trivial. After all, they make the call. Oh well. I learned valuable stuff in the process. I learned that wording/noting your thoughts in the process helps you to see where your line of reasoning is heading, and what the (sometimes hidden) hypotheses are. It was all about the journey[1] of course, and not so much about the eventual outcome (which I felt was only a partial success).

[1] Although it was a personal journey, it was inspired by James Lyndsay, who encouraged me to share my thoughts on diagnosing bugs